Generative AI and cultural biases

This workshop centered on using generative artificial intelligence (GenAI) services in innovative ways, focusing specifically on text-to-image (TTI) tools such as DALL-E, Midjourney, and Stable Diffusion. TTI models are generative AI models that transform users' text input (prompts) into images. The core idea was to design a workflow in which participants collaboratively generate prompts and images on a given topic. The activity aimed to foster awareness of individual thought processes and biases related to the topic, encouraging participants to reflect on their own perspectives and knowledge. By analyzing the keywords chosen to describe the visuals to be generated (e.g. “the stereotypical Italian migrant”), the workshop integrates discussion of the topic itself with self-reflection on personal assumptions and expectations, as well as insight into how the GenAI models render these keywords into visuals. The workshop was tested with students of the Bachelor’s course Multilingualism: Language and Languages in a World Full of Differences.

Background information

The first challenge is helping users understand how AI models, in this context TTI models, operate. It is important to be aware that these models have human bias embedded in them, and that they can amplify such biases.

The second challenge is to use such biases in a positive manner. AI bias is much discussed in the literature, and many mitigation strategies have been operationalized. However, these strategies currently address specific categories (for example, bias related to age, race, gender, or religion) and do not account for the fact that bias can and does go beyond them. Because current GenAI models communicate in a natural way, these biases escape users' attention and go unrecognized or, worse, are used to persuade users.

The goal of the workshop is to show both the users' biases and the AI's biases, and to give students space to reflect on where these biases come from, in which contexts they might become dangerous to users or vulnerable groups, and how, or whether, such biases could be put in a different light.

This workshop could be a handy tool for creating awareness and a discussion space for those interested in learning about and discussing AI and human bias, and how it affects decision making.

Please find the workshop and workflow set-up here: Workshop template.

Project description

The workshop and workflow design were based on the research of PhD student Vera van der Berg. She developed and tested a workflow for self-reflection with an image analysis model, aimed at designers and design students. To trigger self-reflection, the design students completed the following steps:

- The students were tasked with creating an artwork and preparing for it by bringing in images for inspiration.

- Annotated images are essential in preparing image analysis models; the data usually comes from the alt-text of internet images. Here, we asked the students to create their own training dataset using custom (personal) labels. First, they were asked to find interesting keywords to describe their images. These keywords were then used to annotate the images.

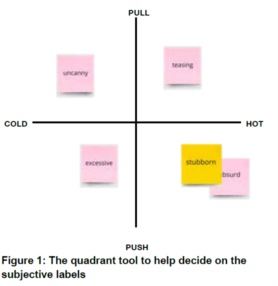

- We asked the students to choose at most 4 labels for annotation. We also provided them with a ‘quadrant tool’ to help them choose their annotations. The tool has a simple but powerful structure, with a ‘push/pull’ x-axis and a ‘warm/cold’ y-axis.

These dimensions are intentionally left somewhat undefined, inviting participants to determine what “push/pull” and “warm/cold” mean in relation to their specific topic of interest. This open-ended framing encourages reflective questions like “Does this element in the image pull me in or push me away?” and “Does this element feel ‘warm’ or ‘cold’?” (see Figure 1).

- Each image needed to be annotated by hand, which is a slow and thought-provoking task. The students spent a day annotating their images and reported that the task made them more aware of their labels and choices. Some decided to change their labels and start the annotation task anew. Others accepted that the semantic or emotional meaning of their labels had shifted during annotation, but chose to pay the penalty and kept images under the same label even where the label now connoted different ideas.

- The students created their artworks and asked the trained AI model to analyze them.

There were several reflective activities throughout the workshop. The students were asked to reflect on their choices of images as well as their choices of annotation labels. While labeling the images with the keywords, they also engaged in discussion. Their artworks were then assessed by the AI, which generated a final layer of reflection. The students inevitably discussed their expectations of the AI model.
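As an illustration of the annotation step described above, the sketch below shows one way a personal label set and a position on the quadrant tool could be recorded. It is not the tool used in the research: the names `check_label_set`, `QuadrantPosition`, and `annotate`, the numeric scale from -1.0 to 1.0, the file name, and the example labels are all hypothetical, and reading the limit of 4 as applying to a student's whole label set is an assumption.

```python
from dataclasses import dataclass

MAX_LABELS = 4  # the text says "at most 4 labels"; applied here to the whole label set (assumption)


@dataclass(frozen=True)
class QuadrantPosition:
    """Position on the quadrant tool; the -1.0..1.0 scale is an assumed convention."""
    push_pull: float  # negative = pushes me away, positive = pulls me in
    warm_cold: float  # negative = feels cold, positive = feels warm

    def quadrant(self) -> str:
        horizontal = "pull" if self.push_pull >= 0 else "push"
        vertical = "warm" if self.warm_cold >= 0 else "cold"
        return f"{horizontal}/{vertical}"


def check_label_set(labels):
    """Return the sorted, de-duplicated personal labels, enforcing the size limit."""
    unique = sorted(set(labels))
    if not unique or len(unique) > MAX_LABELS:
        raise ValueError(f"choose between 1 and {MAX_LABELS} labels")
    return unique


def annotate(image, label, position, label_set):
    """Create one hand-made annotation record for an image."""
    if label not in label_set:
        raise ValueError(f"{label!r} is not in the personal label set")
    return {"image": image, "label": label, "quadrant": position.quadrant()}


# Hypothetical usage with invented labels and file name
labels = check_label_set(["nostalgic", "crowded", "formal"])
record = annotate("img_01.png", "nostalgic", QuadrantPosition(0.6, -0.2), labels)
print(record)
```

Storing the quadrant position next to each label makes it possible to notice later when images under the same label end up in different quadrants, which is the kind of shift in meaning the students reported.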

This workshop and its workflow were adapted for the course Multilingualism: Language and Languages. The adaptation posed three main challenges:

- The lack of a designer's perspective and skill set might trigger less self-reflection. Self-reflection is a widely used design strategy, whereas students from other backgrounds may lack this discipline (i.e. reviewing their decision-making processes reflectively during a project). One remedy would be a series of workshops in which design thinking is introduced to the students first. Design thinking is a widely used approach to problem solving, and students would benefit from learning this skill.

- Because no model was trained, a crucial moment of self-reflection was eliminated in our adaptation of the workshop. This decision was made due to time constraints. Being involved in the training makes end users curious about how the model works and how the training affected the model's performance, creating expectations that later become personal points for reflection. To respond to this challenge, we created deliberate moments during the workshop in which we asked the students reflective questions about their prompt design and the keywords they had used. As a better solution, we are currently working on an interface for a training session, so that students can quickly generate their own personalized AI model for image generation.

- Without the technical support and expertise that we had provided for the PhD research, students might have a different experience and engage less with the TTI models, which would defeat the aim of the workshop.

The workshop was set up so that the students' interactions with each other and with the TTI service were observed by Dr. Manuela Pinto and the workshop organizers. At the end of the workshop, the students' feedback on the tasks themselves was collected verbally.

The feedback received from Dr. Manuela Pinto was that the workshop created discussion about cultural differences, as students generated images of “the stereotypical Italian migrant” – and that was the main aim. In that sense, the setup was successful.

However, the team also wanted an assessment of the interface the students were using, and of whether and how they had experienced setbacks from being unfamiliar with these services. Unfortunately, the survey run after the workshop was answered collectively: each team filled in a single usability assessment scale, which is in principle designed to be answered individually. As a result, the team could not determine the impact of using the service itself, i.e. whether the students liked the image generation or whether the exercise itself was not clearly documented.

Lessons learned

- What worked well: the discussions about how to prompt in order to create a specific visual. This step triggered discussions about expectations, namely which keywords are used to describe a stereotypical Italian migrant.

- What did not work as expected: the generated visuals became the main focus. The model's limited ability to translate a prompt into the expected image did not generate a discussion about how the model works, but rather a fixation on how to accomplish the task better. This is probably due to the task orientation: we gave the task of creating images and did not explicitly state the aim of the workshop, i.e. that there should also be space for discussion during the generation. That said, we made ample time during the workshop for such discussions by asking how and why the students had chosen these specific keywords for their prompts, and how many of those keywords they saw in the generated image. We also asked what keywords they would use to describe the generated images.

- The original assignment was to collect images of Italian immigrants to create a similar discussion. We wanted to expand the discussion by creating images that showcase the AI training. However, the problematic points in the images were seen as shortcomings of the model, not necessarily as an accumulated effect of the training dataset; that is, how such imagery is described in alt-text was not automatically visible. For context, for teachers who are not familiar with AI training for TTI models: many images shared on the internet have alt-text descriptions. These are the texts shown in place of an image that has not loaded (some older browsers also displayed them on hover). Their most common use dates from an era when internet connections were slow and users had to wait for images to download. Alt-texts were thus very context dependent and did not necessarily contain sufficiently detailed visual descriptions. They also reflected what their authors saw as important to emphasize in an image. Contextual bias, along with cultural bias, is therefore commonly found in these descriptions. We thought the current workshop could give a glimpse into these biases, but that did not happen on its own. If that is the aim, a more elaborate mapping of this workshop needs to be prepared.
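The reflective questions mentioned above (which prompt keywords the students recognized in the generated image, and which keywords they would use to describe it) could be tallied with a small helper like the one below. This is a minimal sketch for preparing a discussion, not something used in the workshop; the function name `compare_keywords` and the example keywords are invented for illustration.

```python
def normalise(keywords):
    """Lower-case and trim keywords so that 'Suitcase ' and 'suitcase' match."""
    return {k.strip().lower() for k in keywords if k.strip()}


def compare_keywords(prompt_keywords, seen_in_image, described_after):
    """Compare what was asked for with what students saw and described.

    prompt_keywords: keywords the students put in their prompt
    seen_in_image:   prompt keywords they recognized in the generated image
    described_after: keywords they would use to describe the generated image
    """
    prompt = normalise(prompt_keywords)
    seen = normalise(seen_in_image)
    described = normalise(described_after)
    return {
        "rendered": sorted(prompt & seen),             # asked for and recognized
        "not_rendered": sorted(prompt - seen),         # asked for but not recognized
        "added_by_model": sorted(described - prompt),  # described but never asked for
    }


# Hypothetical example; these keywords are placeholders, not workshop data
result = compare_keywords(
    prompt_keywords=["Suitcase", "train station", "1950s"],
    seen_in_image=["suitcase", "1950s"],
    described_after=["suitcase", "hat", "black and white"],
)
for category, words in result.items():
    print(f"{category}: {', '.join(words)}")
```

The `added_by_model` list is the most useful starting point for a conversation about training data, since it collects what appeared in the image without being requested.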

Take home message

- Think about the main reason why, and how, you want to use TTI as an assignment. In our case we tried to create several discussions (about one's own bias, the AI's bias, and how a certain ethnic group is perceived in popular culture), and the multitude of aims became the main shortcoming of the exercise.

- Use a service that you have good control over, or make the time to implement a controllable model, such as a Stable Diffusion model with a LoRA that you can train and prepare for the exercise.

- Make time to train the students in prompting for images and in refining the resulting images.

Central AI policy

All AI-related activities on this page must be implemented in line with Utrecht University’s central AI policy and ethical code.

Responsibility for appropriate tool choice, data protection, transparency, and assessment use remains with the instructor.